(1) Feng Liang, The University of Texas at Austin (work partially done during an internship at Meta GenAI) (Email: [email protected]);

(2) Bichen Wu, Meta GenAI (corresponding author);

(3) Jialiang Wang, Meta GenAI;

(4) Licheng Yu, Meta GenAI;

(5) Kunpeng Li, Meta GenAI;

(6) Yinan Zhao, Meta GenAI;

(7) Ishan Misra, Meta GenAI;

(8) Jia-Bin Huang, Meta GenAI;

(9) Peizhao Zhang, Meta GenAI (Email: [email protected]);

(10) Peter Vajda, Meta GenAI (Email: [email protected]);

(11) Diana Marculescu, The University of Texas at Austin (Email: [email protected]).

Table of Links

- Abstract and Introduction

- 2. Related Work

- 3. Preliminary

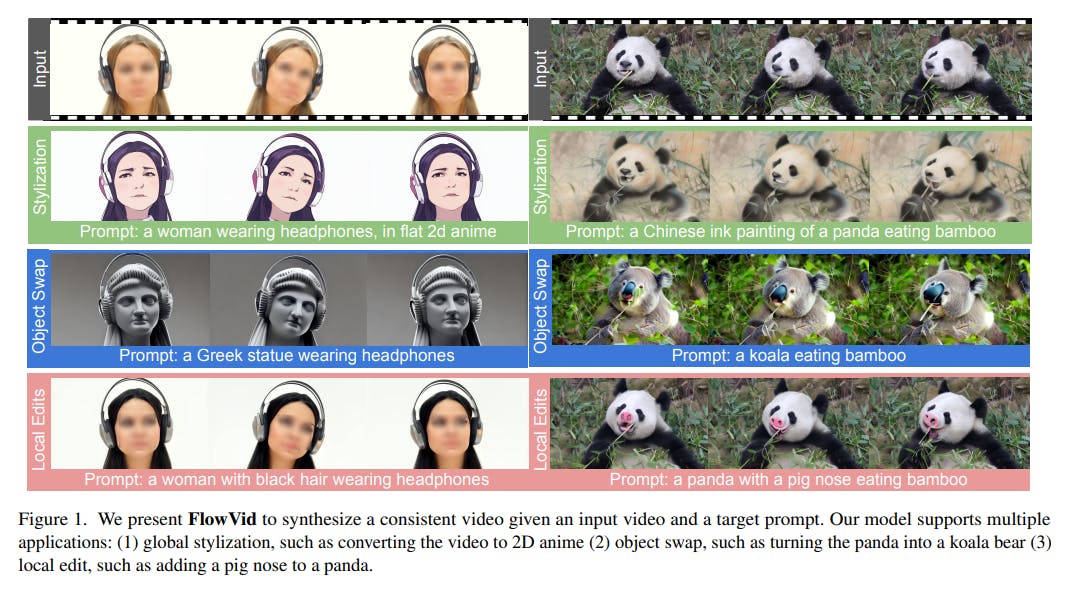

- 4. FlowVid

- 4.1. Inflating image U-Net to accommodate video

- 4.2. Training with joint spatial-temporal conditions

- 4.3. Generation: edit the first frame then propagate

- 5. Experiments

- 5.1. Settings

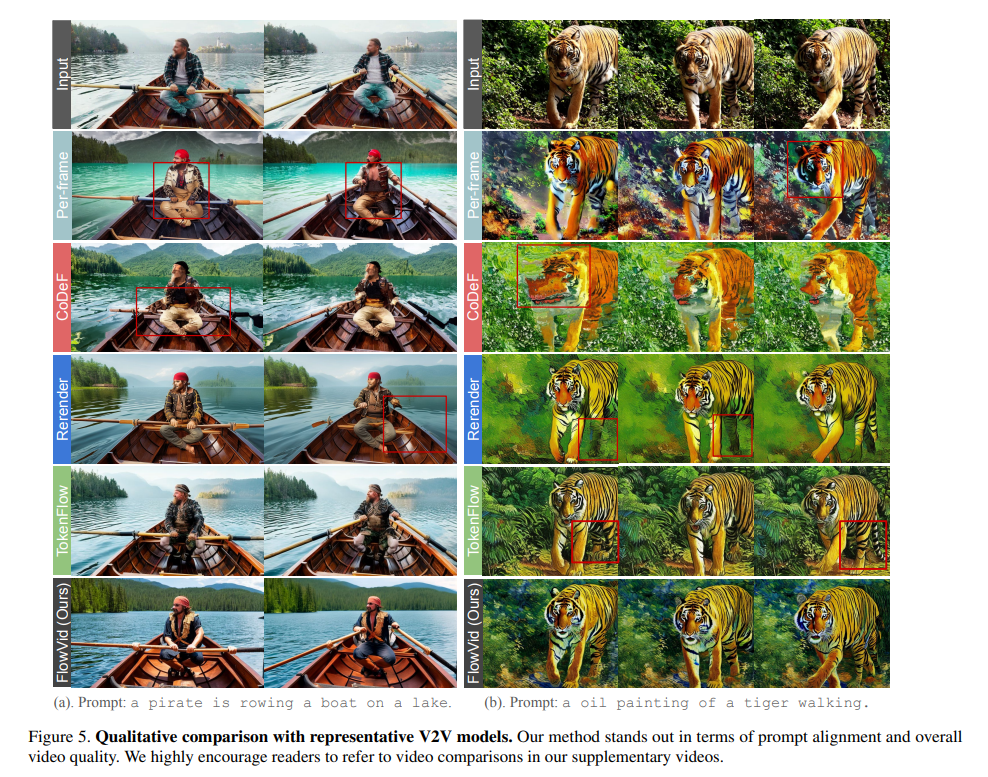

- 5.2. Qualitative results

- 5.3. Quantitative results

- 5.4. Ablation study and 5.5. Limitations

- Conclusion, Acknowledgments and References

- A. Webpage Demo and B. Quantitative comparisons

5. Experiments

5.1. Settings

Implementation Details. We train our model on 100k videos from Shutterstock [1]. From each training video, we sequentially sample 16 frames at an interval of {2, 4, 8}, which corresponds to clips lasting roughly {1, 2, 4} seconds (assuming a source video at 30 FPS). The resolution of all images, including input frames, spatial condition images, and flow-warped frames, is set to 512×512 via center cropping. We train with a batch size of 1 per GPU on 8 GPUs, for a total batch size of 8. We use the AdamW optimizer [28] with a learning rate of 1e-5 for 100k iterations. As detailed in our method, we jointly train the main U-Net and ControlNet U-Net branches with v-parameterization [41]. Training takes four days on one 8×A100-80G node.
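The frame sampling arithmetic above can be illustrated with a minimal sketch. The function names, the random choice of start frame, and the error handling are assumptions made for illustration; the text only states the frame count, the intervals, and the 30 FPS source rate.

```python
import random

NUM_FRAMES = 16        # frames sampled per training clip (from the text)
INTERVALS = (2, 4, 8)  # candidate sampling intervals (from the text)
SOURCE_FPS = 30        # source frame rate assumed in the text


def sample_frame_indices(total_frames, interval, num_frames=NUM_FRAMES, rng=random):
    """Pick `num_frames` indices spaced `interval` apart.

    The random start position is an assumption; the text does not say
    how the first frame is chosen.
    """
    span = (num_frames - 1) * interval + 1  # frames covered, first to last inclusive
    if total_frames < span:
        raise ValueError("video too short for this interval")
    start = rng.randrange(total_frames - span + 1)
    return [start + i * interval for i in range(num_frames)]


def clip_seconds(interval, num_frames=NUM_FRAMES, fps=SOURCE_FPS):
    """Approximate duration in seconds covered by one sampled clip."""
    return num_frames * interval / fps


if __name__ == "__main__":
    for interval in INTERVALS:
        print(interval, round(clip_seconds(interval), 2))
```

With 16 frames and a 30 FPS source, the three intervals cover about 1.07, 2.13, and 4.27 seconds, which is consistent with the rounded {1, 2, 4} second figures quoted above.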

During generation, we first generate keyframes with our trained model and then use an off-the-shelf frame interpolation model, such as RIFE [21], to generate the non-key frames. By default, we produce 16 keyframes at an interval of 4, corresponding to a 2-second clip at 8 FPS. We then use RIFE to interpolate the results to 32 FPS. We employ classifier-free guidance [15] with a scale of 7.5 and use 20 inference sampling steps. We also use the Zero SNR noise scheduler [27]. Following FateZero [35], we fuse the self-attention features obtained during the DDIM inversion of the corresponding keyframes from the input video. We evaluate FlowVid with two different spatial conditions: canny edge maps [5] and depth maps [38]. A comparison of these controls can be found in Section 5.4.
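As a rough illustration of the generation settings, the sketch below works through the keyframe timing and shows the commonly used form of the classifier-free guidance combination. All function names are hypothetical, the guidance formula is the standard formulation rather than one confirmed by this section, and plain Python lists stand in for the model's noise predictions.

```python
SOURCE_FPS = 30         # source frame rate assumed in the text
KEYFRAME_INTERVAL = 4   # default keyframe interval (from the text)
NUM_KEYFRAMES = 16      # default number of keyframes (from the text)
GUIDANCE_SCALE = 7.5    # classifier-free guidance scale (from the text)


def keyframe_indices(start=0, interval=KEYFRAME_INTERVAL, num=NUM_KEYFRAMES):
    """Indices of the input-video frames used as keyframes."""
    return [start + i * interval for i in range(num)]


def keyframe_fps(source_fps=SOURCE_FPS, interval=KEYFRAME_INTERVAL):
    """Effective frame rate of the keyframe sequence (7.5, quoted as 8 FPS)."""
    return source_fps / interval


def interpolation_factor(key_fps=8, target_fps=32):
    """How many output frames per keyframe the interpolator must produce."""
    return target_fps // key_fps


def classifier_free_guidance(eps_uncond, eps_cond, scale=GUIDANCE_SCALE):
    """Standard CFG combination: move from the unconditional prediction
    toward the conditional one by `scale` (assumed formulation)."""
    return [u + scale * (c - u) for u, c in zip(eps_uncond, eps_cond)]


if __name__ == "__main__":
    print(keyframe_indices()[-1])   # index of the last keyframe
    print(keyframe_fps())           # effective keyframe rate
    print(interpolation_factor())   # upsampling needed to reach 32 FPS
```

Going from 8 FPS to 32 FPS is a 4x upsampling; how that factor is reached with RIFE (for example, by repeated 2x passes) is not specified in the text.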

Evaluation. We select 25 object-centric videos from the public DAVIS dataset [34], covering humans, animals, etc. We manually design 115 prompts for these videos, ranging from stylization to object swap. In addition, we collect 50 Shutterstock videos [1] with 200 designed prompts. We conduct both qualitative (see Section 5.2) and quantitative (see Section 5.3) comparisons with state-of-the-art methods, including Rerender [49], CoDeF [32], and TokenFlow [13]. We use their official code with the default settings.

This paper is available on arXiv under the CC 4.0 license.